Implement a simple ReAct Agent using OpenAI function calling

A simple example of using OpenAI Function Calling in a ReAct loop.

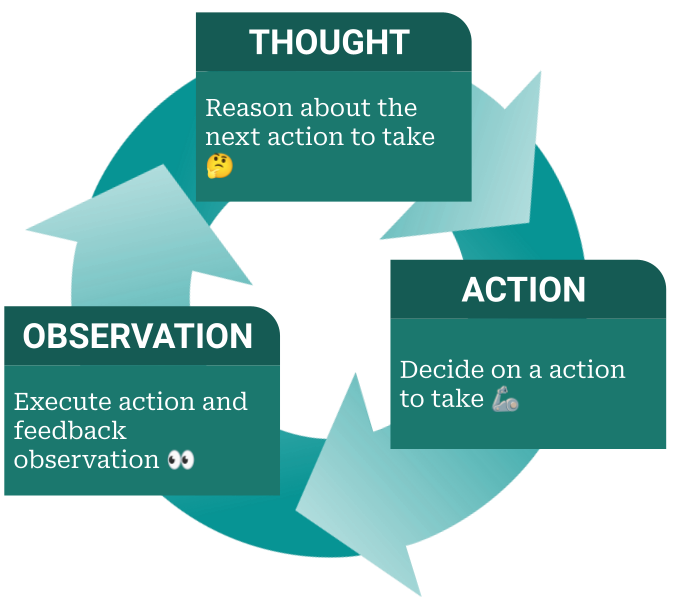

ReAct Loop

The ReAct loop interleaves reasoning and acting in a thought-action-observation loop. It has been quite popular and successful as a foundation for building simple LLM-based agents, allowing the agent to iteratively solve a multi-step task.

I explored integrating the ReAct loop with a Python REPL and embedding-based function search in a previous post titled "ReAct REPL Agent". In this post we revisit the ReAct loop, but this time using the OpenAI Function Calling API. I'll provide a simple example of how to set up a ReAct loop that uses the OpenAI Function Calling API to perform the actions.

OpenAI Function Calling

The OpenAI Function Calling API allows us to have an LLM-based agent call functions. Based on a set of pre-defined functions that we'll provide, and some context, the LLM will select a function to call and provide the arguments to the function. We then have to call the function ourselves and provide the result back to the LLM Agent.
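To make the division of labour concrete, here is a minimal offline sketch of our side of a single function call. The `add` function and the hand-written `simulated_tool_call` dict (including its id) are hypothetical stand-ins for illustration; a real tool call would come back from the API as part of the assistant's response. The point to notice is that the arguments arrive as a JSON-encoded string, which we have to decode before calling the function ourselves.

```python
import json


# Hypothetical local function that the model is allowed to call.
def add(a: float, b: float) -> str:
    """Add two numbers and return the result as a string."""
    return str(a + b)


# Hand-written stand-in for a tool call selected by the model.
# The arguments are a JSON-encoded string, not a dict.
simulated_tool_call = {
    "id": "call_example_1",
    "type": "function",
    "function": {"name": "add", "arguments": '{"a": 2, "b": 3}'},
}

# Our side of the contract: decode the arguments, call the function,
# and wrap the result in a `tool` message that references the call id.
available_functions = {"add": add}
function_name = simulated_tool_call["function"]["name"]
function_args = json.loads(simulated_tool_call["function"]["arguments"])
function_result = available_functions[function_name](**function_args)

tool_message = {
    "role": "tool",
    "tool_call_id": simulated_tool_call["id"],
    "content": function_result,
}
print(tool_message)
```

The resulting `tool` message is what gets appended to the conversation so the LLM can see the outcome of the call in the next step.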

# Imports
import json
import requests
from collections.abc import Callable
from typing import Annotated as A, Literal as L

import openai
from annotated_docs.json_schema import as_json_schema  # https://github.com/peterroelants/annotated-docs

# Setup OpenAI Client
# Get your OpenAI API key from https://platform.openai.com/
openai_client: openai.OpenAI = openai.OpenAI(api_key="<your-api-key-here>")

Agent implementation

As an example, we'll implement a simple agent that can help us find the current weather for our current location.

Defining the functions

Let's start by defining a few simple example functions that we can call. The functions the agent can call are:

- `get_current_location`: To find the current location of the agent (found based on the IP address).
- `get_current_weather`: To find the current weather for a given location.
- `calculate`: To help calculate any conversion between units.
- `finish`: To finish the task and formulate a response. We let the `finish` function raise a `StopException` so we can easily detect when the agent is done.

I'm making use of Python's `typing` library to annotate the function arguments with extra information useful for the LLM. We'll specifically make use of `typing.Annotated` to provide extra information about the arguments, and `typing.Literal` to specify the possible values for the arguments.
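As a small illustration of why these annotations are useful, the sketch below shows how the extra information can be read back at runtime with the standard `typing` helpers. The `set_unit` function is hypothetical and exists only to carry the annotations; this is not necessarily how any particular schema library does it internally.

```python
from typing import Annotated, Literal, get_args, get_origin, get_type_hints


def set_unit(
    unit: Annotated[Literal["celsius", "fahrenheit"], "Unit to report temperatures in."],
) -> None:
    """Hypothetical function, used only to show the annotations."""


# include_extras=True keeps the Annotated metadata instead of stripping it.
hints = get_type_hints(set_unit, include_extras=True)

# Annotated[T, "description"] unpacks to (T, "description").
base_type, description = get_args(hints["unit"])

# Literal["a", "b"] unpacks to the tuple of allowed values.
allowed_values = get_args(base_type)

print(get_origin(base_type) is Literal)
print(description)
print(allowed_values)
```

The description and the allowed values are exactly the kind of information that can be passed on to the LLM as part of a function specification.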

class StopException(Exception):
    """
    Stop Execution by raising this exception (Signal that the task is Finished).
    """

def finish(answer: A[str, "Answer to the user's question."]) -> None:
    """Answer the user's question, and finish the conversation."""
    raise StopException(answer)

def get_current_location() -> str:
    """Get the current location of the user."""
    return json.dumps(requests.get("http://ip-api.com/json?fields=lat,lon").json())

def get_current_weather(
    latitude: float,
    longitude: float,
    temperature_unit: L["celsius", "fahrenheit"],
) -> str:
    """Get the current weather in a given location."""
    resp = requests.get(
        "https://api.open-meteo.com/v1/forecast",
        params={
            "latitude": latitude,
            "longitude": longitude,
            "temperature_unit": temperature_unit,
            "current_weather": True,
        },
    )
    return json.dumps(resp.json())

def calculate(
    formula: A[str, "Numerical expression to compute the result of, in Python syntax."],
) -> str:
    """Calculate the result of a given formula."""
    # Note: eval executes arbitrary Python code; only use this in a trusted demo setting.
    return str(eval(formula))
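Because `calculate` passes LLM-generated text straight to `eval`, it can execute arbitrary Python. For anything beyond a demo, one option is to restrict evaluation to plain arithmetic. The following is a minimal sketch of that idea using the standard `ast` module; `safe_calculate` and its helper are hypothetical names and are not part of the original example.

```python
import ast
import operator

# Whitelist of arithmetic operators the evaluator accepts.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def _eval_node(node: ast.AST) -> float:
    """Recursively evaluate a node, rejecting anything that is not plain arithmetic."""
    if (
        isinstance(node, ast.Constant)
        and isinstance(node.value, (int, float))
        and not isinstance(node.value, bool)
    ):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
        return _BINARY_OPS[type(node.op)](_eval_node(node.left), _eval_node(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
        return _UNARY_OPS[type(node.op)](_eval_node(node.operand))
    raise ValueError(f"Unsupported expression element: {type(node).__name__}")


def safe_calculate(formula: str) -> str:
    """Hypothetical replacement for `calculate` that only accepts +, -, *, / and numbers."""
    tree = ast.parse(formula, mode="eval")
    return str(_eval_node(tree.body))


print(safe_calculate("2 * (3 + 4)"))
```

Names, function calls, and attribute access all raise a `ValueError`, which the agent loop can report back to the LLM like any other function error.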

Providing the function specifications to OpenAI

OpenAI function calling requires us to provide the function specifications to the API as a JSON schema.

I'm using the `as_json_schema` function from annotated-docs to convert the function annotations to JSON schemas describing the functions. `as_json_schema` leverages the `typing` annotations to provide extra information about the arguments to the LLM.
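For orientation, this is roughly the shape of a function specification in the `tools` format of the Chat Completions API, written out by hand for `get_current_weather`. It is an illustrative sketch and not the verbatim output of `as_json_schema`, which may differ in details such as extra descriptions or field ordering.

```python
import json

# Hand-written tool specification (illustrative, not generated by a library).
weather_tool = {
    "type": "function",
    "function": {
        "name": "get_current_weather",
        "description": "Get the current weather in a given location.",
        "parameters": {
            "type": "object",
            "properties": {
                "latitude": {"type": "number"},
                "longitude": {"type": "number"},
                # A Literal annotation maps naturally onto a JSON Schema enum.
                "temperature_unit": {
                    "type": "string",
                    "enum": ["celsius", "fahrenheit"],
                },
            },
            "required": ["latitude", "longitude", "temperature_unit"],
        },
    },
}

print(json.dumps(weather_tool, indent=2))
```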

# All functions that can be called by the LLM Agent
name_to_function_map: dict[str, Callable] = {
    get_current_location.__name__: get_current_location,
    get_current_weather.__name__: get_current_weather,
    calculate.__name__: calculate,
    finish.__name__: finish,
}

# JSON Schemas for all functions
function_schemas = [
    {"function": as_json_schema(func), "type": "function"}
    for func in name_to_function_map.values()
]

# Print the JSON Schemas
for schema in function_schemas:
    print(json.dumps(schema, indent=2))

QUESTION_PROMPT = "What's the current weather for my location? Give me the temperature in degrees Celsius and the wind speed in knots."

Run the ReAct loop

The ReAct loop builds up the context iteratively, taking one step at a time. Using the OpenAI Chat Completions API, the messages, function calls, and function call results are all appended to the `messages` list. The full `messages` list is then used as the context for the next step.

Each iteration of the ReAct loop consists of the following steps:

- Send the current context of `messages` to the LLM Agent and get a response.
- Append the response to the `messages` list.
- For each function call in the response, call the function and append the result to the `messages` list.

Let's run our question through the ReAct loop and see what happens.

# Initial "chat" messages
messages = [
    {
        "role": "system",
        "content": "You are a helpful assistant who can answer multistep questions by sequentially calling functions. Follow a pattern of THOUGHT (reason step-by-step about which function to call next), ACTION (call a function as a next step towards the final answer), OBSERVATION (output of the function). Reason step by step which actions to take to get to the answer. Only call functions with arguments coming verbatim from the user or the output of other functions.",
    },
    {
        "role": "user",
        "content": QUESTION_PROMPT,
    },
]

def run(messages: list[dict]) -> list[dict]:
    """
    Run the ReAct loop with OpenAI Function Calling.
    """
    # Run in loop
    max_iterations = 20
    for i in range(max_iterations):
        # Send list of messages to get next response
        response = openai_client.chat.completions.create(
            model="gpt-4-1106-preview",
            messages=messages,
            tools=function_schemas,
            tool_choice="auto",
        )
        response_message = response.choices[0].message
        messages.append(response_message)  # Extend conversation with assistant's reply
        # Check if GPT wanted to call a function
        tool_calls = response_message.tool_calls
        if tool_calls:
            for tool_call in tool_calls:
                function_name = tool_call.function.name
                # Validate function name
                if function_name not in name_to_function_map:
                    print(f"Invalid function name: {function_name}")
                    messages.append(
                        {
                            "tool_call_id": tool_call.id,
                            "role": "tool",
                            "name": function_name,
                            "content": f"Invalid function name: {function_name!r}",
                        }
                    )
                    continue
                # Get the function to call
                function_to_call: Callable = name_to_function_map[function_name]
                # Try getting the function arguments
                try:
                    function_args_dict = json.loads(tool_call.function.arguments)
                except json.JSONDecodeError as exc:
                    # JSON decoding failed
                    print(f"Error decoding function arguments: {exc}")
                    messages.append(
                        {
                            "tool_call_id": tool_call.id,
                            "role": "tool",
                            "name": function_name,
                            "content": f"Error decoding function call `{function_name}` arguments {tool_call.function.arguments!r}! Error: {exc!s}",
                        }
                    )
                    continue
                # Call the selected function with generated arguments
                try:
                    print(
                        f"Calling function {function_name} with args: {json.dumps(function_args_dict)}"
                    )
                    function_response = function_to_call(**function_args_dict)
                    # Extend conversation with function response
                    messages.append(
                        {
                            "tool_call_id": tool_call.id,
                            "role": "tool",
                            "name": function_name,
                            "content": function_response,
                        }
                    )
                except StopException as exc:
                    # Agent wants to stop the conversation (Expected)
                    print(f"Finish task with message: '{exc!s}'")
                    return messages
                except Exception as exc:
                    # Unexpected error calling function
                    print(
                        f"Error calling function `{function_name}`: {type(exc).__name__}: {exc!s}"
                    )
                    messages.append(
                        {
                            "tool_call_id": tool_call.id,
                            "role": "tool",
                            "name": function_name,
                            "content": f"Error calling function `{function_name}`: {type(exc).__name__}: {exc!s}!",
                        }
                    )
                    continue
    return messages

messages = run(messages)

Looking at the output it seems like the agent is able to answer our question in a few steps.

Let's look at the full context of messages to see which functions were called and what the results were:

for message in messages:
    if not isinstance(message, dict):
        message = message.model_dump()  # Pydantic model
    print(json.dumps(message, indent=2))

Looking at the full list of messages we can see that the agent has answered our question by:

- Finding the current location of the agent using the `get_current_location` function.
- Getting the current weather for the location using the `get_current_weather` function.
- Converting the wind speed from km/h to knots using the `calculate` function.
- Finishing the task with a final answer by calling the `finish` function.
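The unit conversion in the third step is simple arithmetic: one knot is defined as exactly 1.852 km/h, so a speed in km/h is divided by 1.852. The sketch below shows the kind of computation the agent would delegate to `calculate`; the wind speed value and the helper name are hypothetical, chosen only for illustration.

```python
KMH_PER_KNOT = 1.852  # One knot is exactly 1.852 km/h by definition.


def kmh_to_knots(speed_kmh: float) -> float:
    """Convert a speed from kilometres per hour to knots."""
    return speed_kmh / KMH_PER_KNOT


wind_speed_kmh = 18.52  # Hypothetical reading, not taken from the weather API.
print(round(kmh_to_knots(wind_speed_kmh), 2))
```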

This post at peterroelants.github.io is generated from an IPython notebook file. Link to the full IPython notebook file